Could you tell the difference between a non-native-English-speaking 13-year-old Ukrainian boy and a computer program? On Saturday, at the Royal Society, one in three human judges was fooled. So, it has been widely reported, the iconic Turing Test has been passed, and a brave new era of Artificial Intelligence (AI) has begun.

Not so fast. While this event marks a modest improvement in the abilities of so-called ‘chatbots’ to engage fluently with humans, real AI requires much more.

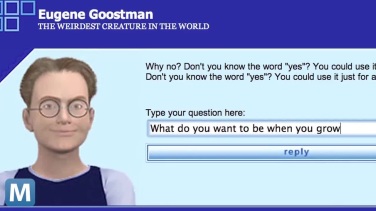

Here’s what happened. At a competition held in central London, thirty judges (including politician Lord Sharkey, computer scientist Kevin Warwick, and Red Dwarf actor Robert Llewellyn) interacted with ‘Eugene Goostman’ in a series of five-minute text-only exchanges. In the end, 33% of the judges (reports do not yet say which, though tweets implicate Llewellyn) were persuaded that ‘Goostman’ was real. The other 67% were not. It turns out that ‘Eugene Goostman’ is not a teenager from Odessa but a computer program, a ‘chatbot’ created by computer engineers Vladimir Veselov and Eugene Demchenko. According to his creators, ‘Goostman’ was ‘born’ in 2001, owns a pet guinea pig, and has a gynaecologist father.

The Turing Test, devised by computer science pioneer and codebreaker Alan Turing, was proposed as a practical alternative to the philosophically challenging and possibly absurd question, “Can machines think?” In one popular interpretation, a human judge interacts with two players, a human and a machine, and must decide which is which. A candidate machine passes the test when the judge consistently fails to distinguish the one from the other. Interactions are limited to exchanges of strings of text to make the competition fair (more on this later; it’s also worth noting that Turing’s original idea was more complex than this, but let’s press on). While there have been many previous attempts and prior claims about passing the test, the Goostman-bot arguably outperformed its predecessors, leading Warwick to noisily proclaim “We are therefore proud to declare that Alan Turing’s Test was passed for the first time on Saturday”.

Alan Turing’s seminal 1950 paper

This is a major overstatement which does a grave disservice to the field of AI. While Goostman may represent progress of a sort (for instance, this year’s competition did not place any particular restrictions on conversation topics), some context is badly needed.

An immediate concern is that Goostman is gaming the system. By imitating a non-native speaker, the chatbot makes its clumsy English expected rather than unusual. Hence its reaction to winning the prize: “I feel about beating the Turing test in quite convenient way”. And its assumed age of thirteen lowers expectations about what counts as a satisfactory response. As Veselov put it, “Thirteen years old is not too old to know everything and not too young to know nothing.” While Veselov’s strategy is cunning, it also shows that the Turing test is as much a test of the judges’ abilities to make suitable inferences and to ask probing questions as it is of the capabilities of intelligent machinery.

More importantly, fooling 33% of judges over five-minute sessions was never the standard intended by Alan Turing for passing his test; it was merely his prediction about how computers might fare within about 50 years of his proposal. (In this, as in much else, he was not far wrong: the original Turing test was described in 1950.) A more natural criterion, as emphasized by the cognitive scientist Stevan Harnad, is for a machine to be consistently indistinguishable from human counterparts over extended periods of time; in other words, to have the generic performance capacity of a real human being. This more stringent benchmark is still a long way off.

Perhaps the most significant limitation exposed by Goostman is the assumption that ‘intelligence’ can be instantiated in the disembodied exchange of short passages of text. On the one hand, this restriction is needed to enable interesting comparisons between humans and machines in the first place. On the other, it simply underlines that intelligent behaviour is intimately grounded in the tight couplings and blurry boundaries separating and joining brains, bodies, and environments. If Saturday’s judges had seen Goostman, or even an advanced robotic avatar voicing its responses, there would have been no question of any confusion. Indeed, the robots that are physically most similar to humans today tend to elicit sensations like anxiety and revulsion, not camaraderie. This is the ‘uncanny valley’, a term coined by robotics professor Masahiro Mori in 1970 (with a nod to Freud) and exemplified by the ‘geminoids’ built by Hiroshi Ishiguro.

Hiroshi Ishiguro and his geminoid. Another imitation game.

A growing appreciation of the importance of embodied, embedded intelligence explains why nobody is claiming that human-like robots are among us, or are in any sense imminent. Critics of AI consistently point to the notable absence of intelligent robots capable of fluent interactions with people, or even with mugs of tea. In a recent blog post I argued that new developments in AI are increasingly motivated by the nearly forgotten discipline of cybernetics, which held that prediction and control were at the heart of intelligent behaviour, not barefaced imitation as in Turing’s test (and, from a different angle, in Ishiguro’s geminoids). While these emerging cybernetics-inspired approaches hold great promise (and are attracting the interest of tech giants like Google), there is still plenty to be done.

These ideas have two main implications for AI. The first is that true AI necessarily involves robotics. Intelligent systems are systems that flexibly and adaptively interact with complex, dynamic, and often social environments. Reducing intelligence to short context-free text-based conversations misses the target by a country mile. The second is that true AI should focus not only on the outcome (i.e., whether a machine or robot behaves indistinguishably from a human or other animal) but also on the process by which the outcome is attained. This is why considerable attention within AI has always been paid to understanding, and simulating, how real brains work, and how real bodies behave.

How the leopard got its spots: Turing’s chemical basis of morphogenesis.

Turing, of course, did much more than propose an interesting but ultimately unsatisfactory (and often misinterpreted) intelligence test. He laid the foundations for modern computer science, he saved untold lives through his prowess in code breaking, and he refused to be cowed by the deep prejudices against homosexuality prevalent in his time, losing his own life into the bargain. He was also a pioneer in theoretical biology: his work in morphogenesis showed how simple interactions could give rise to complex patterns during animal development. And he was a central figure in the emerging field of cybernetics, where he recognized the deep importance of embodied and embedded cognition. The Turing of 1950 might not recognize much of today’s technology, but he would not have been fooled by Goostman.

[postscript: while Warwick & co. have been very reluctant to release the transcript of Goostman’s 2014 performance, this recent Guardian piece has some choice dialogue from 2012, when Goostman polled at 28%, not far off Saturday’s 33%. This piece was updated on June 12 following a helpful dialog with Aaron Sloman.]

Nice text! Besides thinking much harder about complex tasks for embodied AI, let us not forget the widespread pattern-recognition test CAPTCHA (Completely Automated Public Turing test to tell Computers and Humans Apart). Building a machine that engages in human-like conversation, and thus passes the Turing test, is one type of intelligence test; recognizing patterns is another. Intelligence (as measured by diverse problem-solving performance) and consciousness are of course completely different things. But when it comes to minds and machines, hopefully one day a solid theory of consciousness will provide strong arguments against solipsism, whereas there will probably never be a definitive theory of intelligence. One can only compare problem-solving performance across all sorts of systems (biological or not), and maybe come up with an Olympiad by combining several challenges.

Here is a good chat with that chatbot:

http://www.scottaaronson.com/blog/

Someday someone will actually pass it. But there is a limit: note that the test doesn’t show whether the machine is conscious or not.

Better programs will probably appear soon. However, they will be something like Siri. “Intelligence with consciousness” will not come about that way. First, consciousness will arrive soon, like “artificial intelligence” did. It is possible to realize consciousness without passing the Turing test.

The problem is whether it has consciousness or not.
